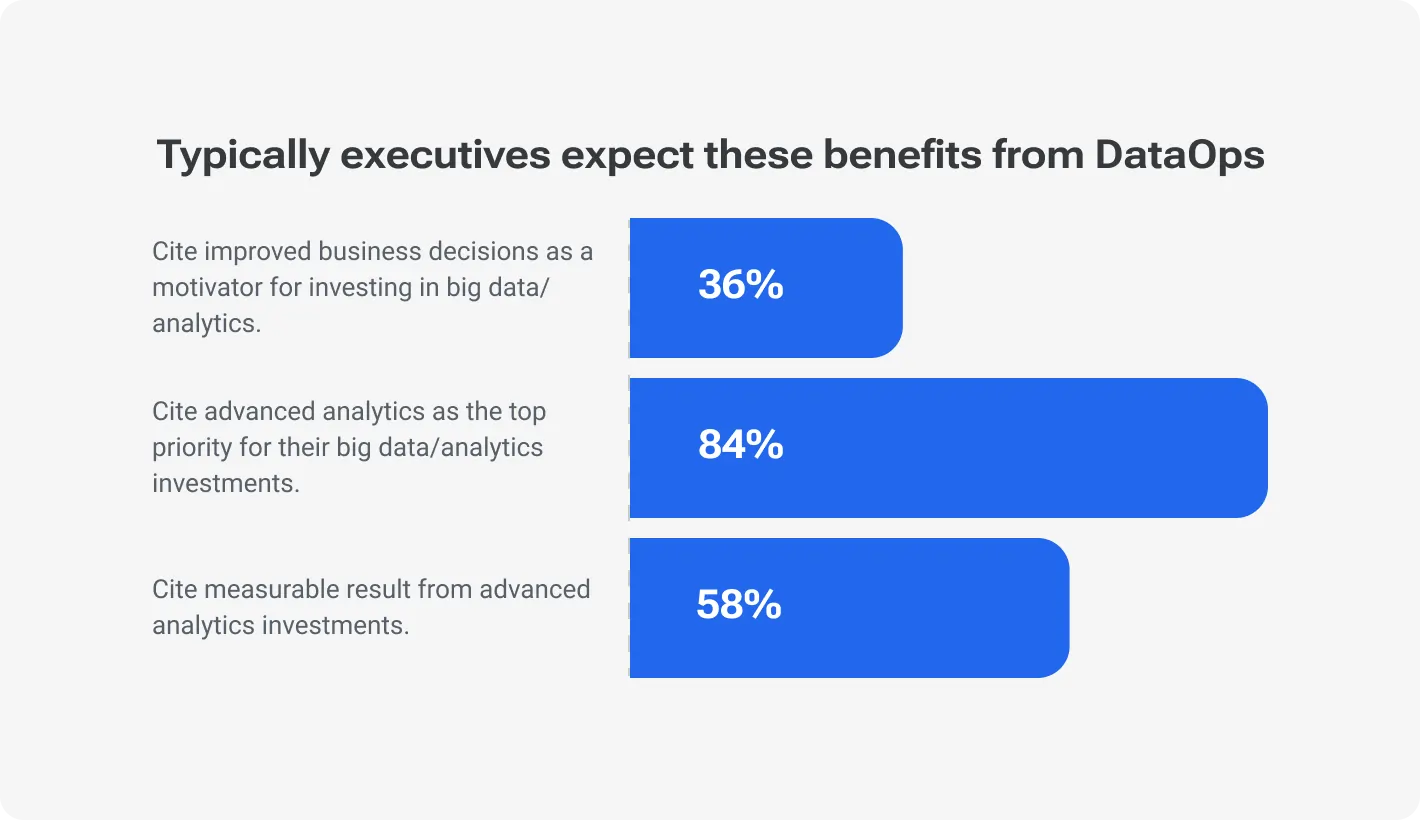

Interesting fact: only 13% of data science projects make it to production. Meanwhile, the amount of data continues to grow. A McKinsey Global Institute report estimates that the volume of data created, captured, replicated, and consumed globally grew tenfold from 2010 to 2020.

The demand for data-driven products is also skyrocketing. By 2025, the global data analytics market is expected to reach USD 105.8 billion. The problem is that traditional development approaches often struggle to manage large volumes of data.

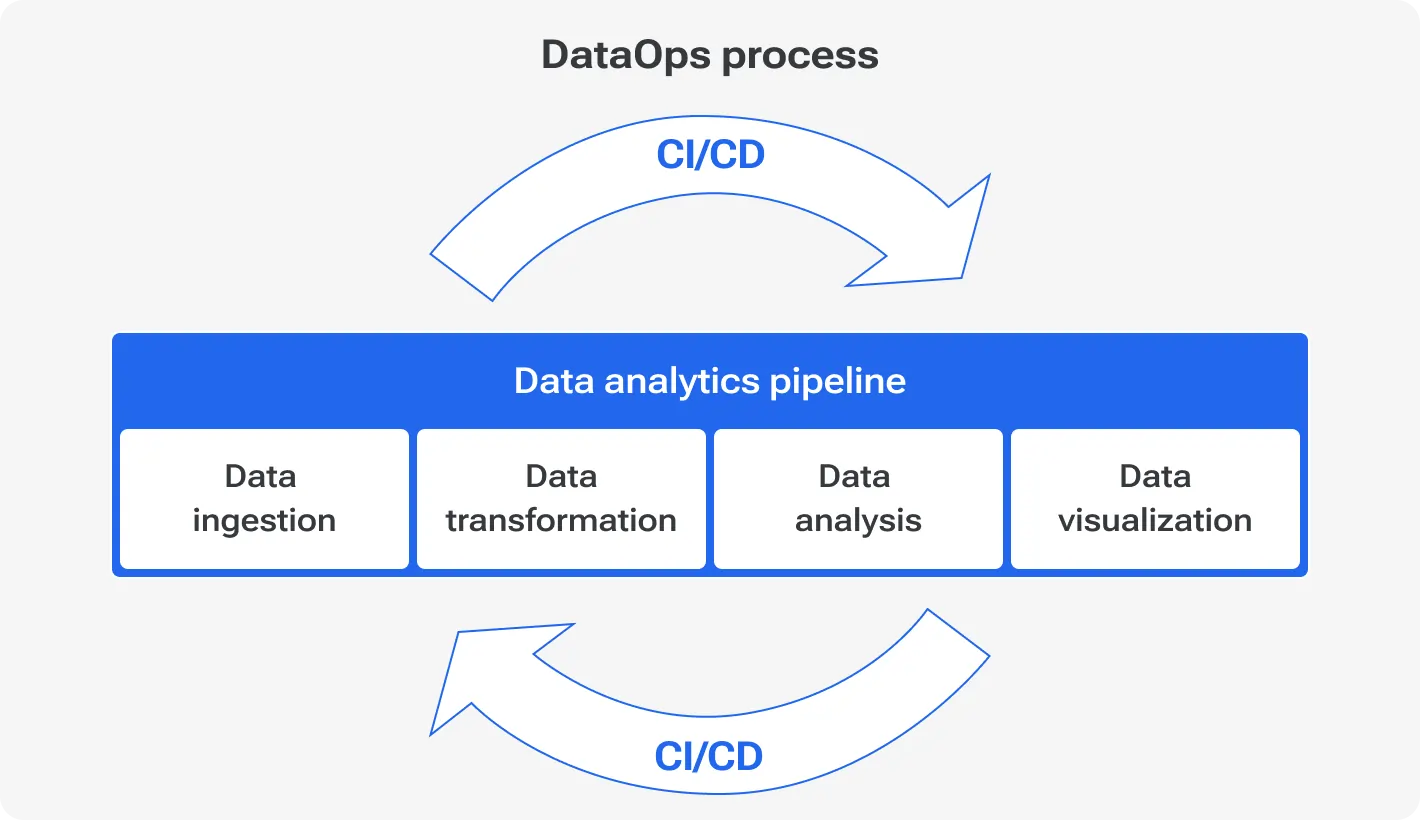

This is where the DataOps methodology comes into play. DataOps adapts DevOps principles for data analytics and data product development. Its core principles are continuous integration and continuous delivery (CI/CD) and agile methodologies, applied to data workflows. In short, DataOps (also called Data Science Ops) aims to streamline and enhance the lifecycle of data-driven product development.

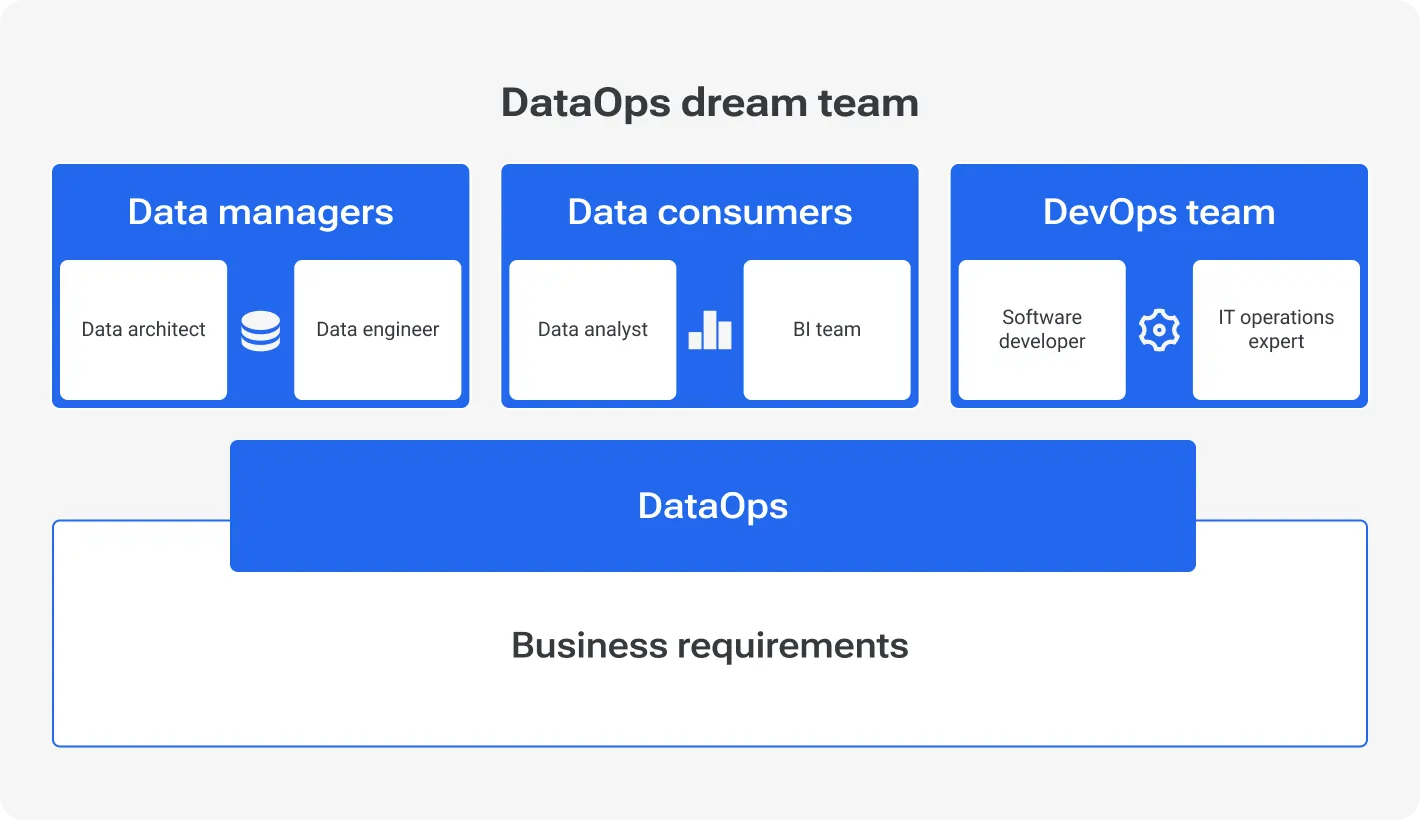

It improves collaboration between data engineers, data scientists, and other stakeholders and emphasizes automation, monitoring, and real-time feedback to ensure that data products are delivered efficiently and accurately.

The best answer to the question of what DataOps is lies in its main advantage: high-quality data products. By reducing the time and effort required to manage data workflows, DataOps allows teams to focus on extracting valuable insights from data.

DevOps: Building the software delivery fast lane

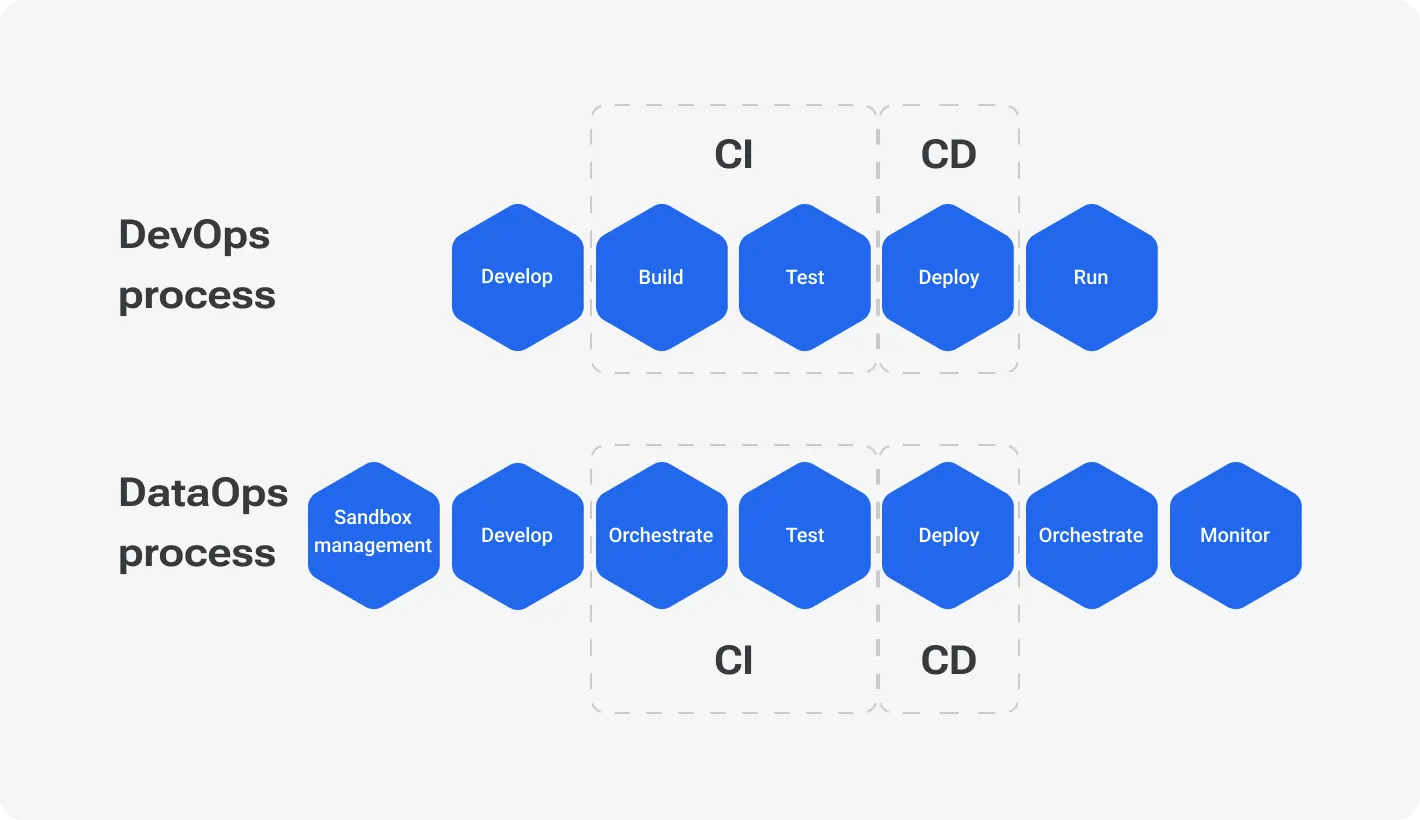

DataOps is a reworking of DevOps (or rather, the same basics applied in a new way). DevOps is widely used across IT teams as a collaborative approach to software development. Here's what makes it tick:

Brings diverse teams closer: Traditionally, development and operations teams functioned in separate silos. Developers would write code, and operations handled deployment and maintenance. DevOps bridges this gap by having teams work together throughout the entire development lifecycle, which makes the transition from development to production smooth.

Automating the mundane: Typically, devs repeat routine tasks a lot — building, testing, and deploying code. DevOps emphasizes the automation of these processes. This frees up valuable time for developers to focus on innovation and problem-solving while also minimizing human error in deployments.

CI/CD for speed: DevOps champions continuous integration and delivery (CI/CD) practices. With CI, code changes are constantly integrated and tested, ensuring early detection and resolution of bugs. CI/CD pipelines then automate the delivery of these tested changes to production.

DevOps success in streamlining processes

Without exaggeration, DevOps has transformed how we develop and deliver software. Collaboration breaks down silos between teams, which leads to faster problem resolution and more efficient workflows.

Analytics and automation in DevOps reduce the time and effort needed for market research and manual tasks. This way, teams can focus more on strategic work, with greater confidence that the software is reliable and secure.

CI/CD practices make software development more agile. Code changes are continuously tested and integrated. This reduces the risk of bugs, too, and ensures that software updates are ready for deployment. Consequently, companies can respond quickly to market changes and customer needs.

The success of DevOps in streamlining software development is evident. Companies that adopt DevOps see faster delivery times, improved software quality, and better team collaboration. It lays the groundwork for methodologies like DataOps, which extend these principles to the data and analytics domain.

DataOps: The same playbook, new data ball

While DevOps excels in the software development arena, DataOps takes those core principles and applies them specifically to building and delivering data-driven products. Calling it a game-changer sounds like a cliché, but it actually is one. Here's why:

Automation: DataOps relies on automation too. Yet, it focuses on automating data pipelines instead of software delivery. This includes data ingestion, transformation, and delivery processes.

Collaboration: Communication gaps are among the most dangerous things in any relatively big company. The numbers bear this out: one widely cited survey of large companies put the average loss from miscommunication at over USD 62M per year. DataOps smooths out these communication issues and ensures close collaboration between data engineers, data scientists, and product development teams.

Continuous Integration and Delivery (CI/CD): CI/CD practices are used, too, to ensure that data flows smoothly through the pipelines. Continuous integration means that data changes are regularly tested and merged. Continuous delivery ensures that data is always ready for analysis.

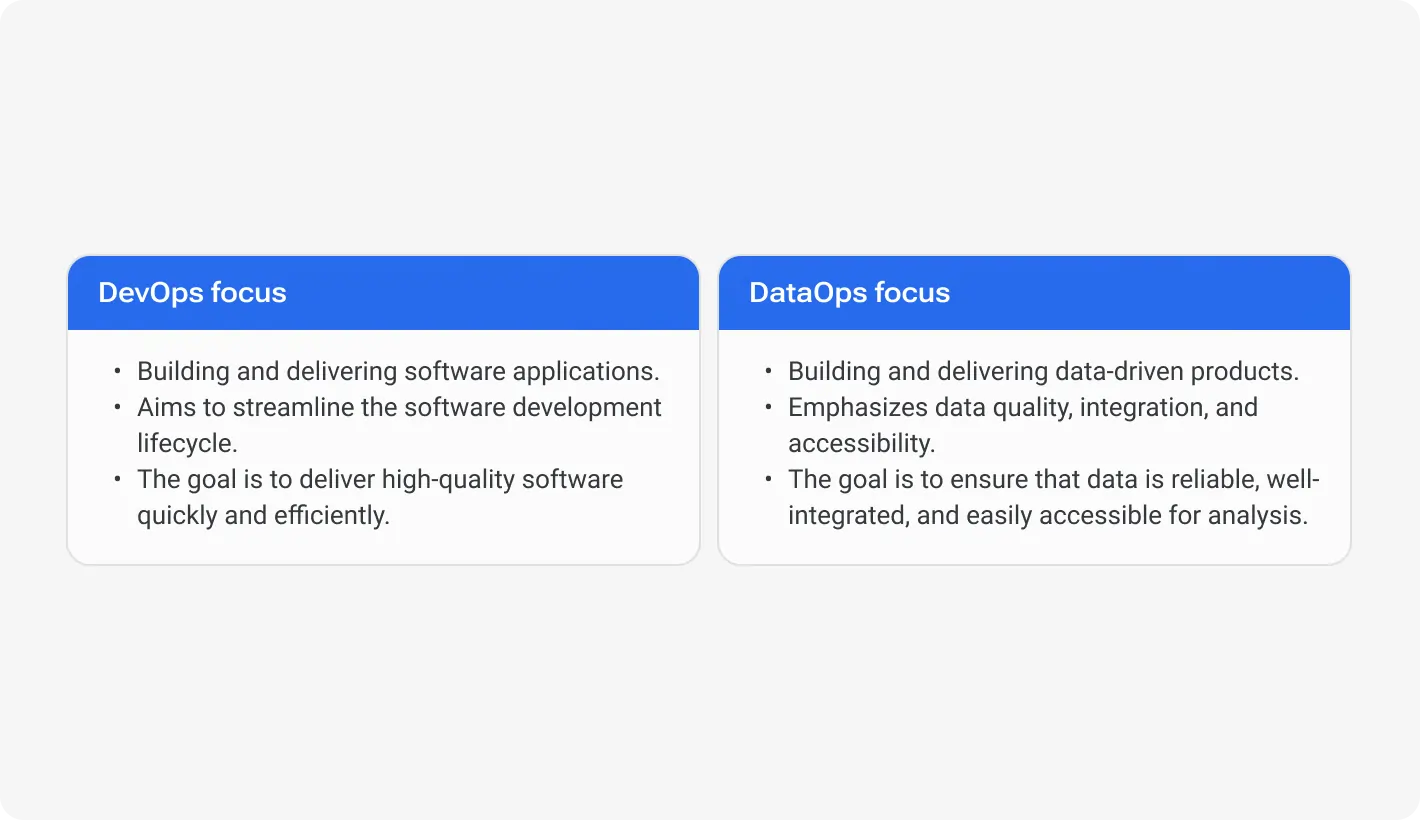

Key differences in focus

DevOps and DataOps share similar principles, but their focus areas differ: DevOps centers on software delivery, while DataOps centers on data pipelines and the data products built on them.

Core functionalities of DataOps

Before implementing DataOps, it helps to understand its core functionalities.

Automating data pipelines: DataOps automates the flow of data from its source to its destination. Typically, the flow looks roughly like this: data ingestion, then transformation, then delivery. Automated pipelines ensure that data is processed quickly and accurately.

Quality control: As DevOps for data, DataOps emphasizes data governance and quality control by setting up rules and standards for data management. Data scientists validate data quality at every stage of the pipeline to ensure that data is accurate, consistent, and secure.

Collaboration (again!): The DataOps approach creates the environment for effortless collaboration between data engineers, data scientists, and product development teams. This way, data is used effectively and drives business insights. It also helps in identifying and solving data-related problems quickly.
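
The ingestion, transformation, and delivery flow described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the function names, the record fields (`customer_id`, `monthly_spend`), and the in-memory `warehouse` list standing in for a real destination are all assumptions made for the example.

```python
from typing import Iterable

Record = dict


def ingest() -> list[Record]:
    """Hypothetical source; in practice this would read from an API, queue, or database."""
    return [
        {"customer_id": "c1", "monthly_spend": "42.50"},
        {"customer_id": "c2", "monthly_spend": "17.00"},
    ]


def transform(records: Iterable[Record]) -> list[Record]:
    """Convert raw string fields into typed values."""
    return [
        {"customer_id": r["customer_id"], "monthly_spend": float(r["monthly_spend"])}
        for r in records
    ]


def deliver(records: Iterable[Record], warehouse: list[Record]) -> int:
    """Hypothetical sink: append rows to a list standing in for a warehouse table."""
    rows = list(records)
    warehouse.extend(rows)
    return len(rows)


def run_pipeline(warehouse: list[Record]) -> int:
    """Run the three stages in order, with no manual step in between."""
    return deliver(transform(ingest()), warehouse)


warehouse: list[Record] = []
delivered = run_pipeline(warehouse)
```

Keeping each stage a separate function means a scheduler or orchestration tool could run, retry, and test the stages independently.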

Brief recap

DataOps extends the principles of DevOps to the world of data and analytics. It adapts automation, collaboration, and CI/CD practices to meet the needs of data teams. It always focuses on data quality, integration, and accessibility. These characteristics allow DataOps to help businesses build reliable and insightful data-driven products.

Get a leg-up with DataScienceOps

Building a house of cards is no easy task: a single flimsy card can topple the entire structure. The same goes for data-driven products. Inaccurate or unreliable data can lead to misleading insights and, ultimately, a product that fails to meet user needs.

DataOps tackles this challenge head-on. The approach offers several important advantages that streamline the analytics pipeline. Let’s run through them.

Improved data quality

DataOps automates data pipelines, ensuring data is ingested, transformed, and delivered without manual intervention. The automation typically includes data quality checks at every stage. For example, data validation rules can catch errors and inconsistencies early, so the data used for analysis is reliable and accurate. To return to our house-of-cards metaphor, it is like building with high-quality cards so that the structure stays sturdy.

❗Implementing real-time data monitoring tools helps catch errors as they occur so they can be corrected quickly. This proactive approach prevents bad data from propagating through the system.
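
To make the idea of data validation rules concrete, here is a small sketch of a rule-based check that a pipeline stage might run before passing records on. The rule names and the `customer_id` and `monthly_spend` fields are hypothetical, chosen only for illustration; real projects often use a dedicated data quality library instead.

```python
from typing import Callable

# Each rule is a (name, predicate) pair. Names and fields are assumptions for this example.
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("customer_id is present", lambda r: bool(r.get("customer_id"))),
    (
        "monthly_spend is non-negative",
        lambda r: isinstance(r.get("monthly_spend"), (int, float))
        and r["monthly_spend"] >= 0,
    ),
]


def validate(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into valid ones and rejected ones paired with the rules they broke."""
    valid, rejected = [], []
    for record in records:
        failures = [name for name, check in RULES if not check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"customer_id": "c1", "monthly_spend": 42.5},
    {"customer_id": "", "monthly_spend": 10.0},
    {"customer_id": "c3", "monthly_spend": -5.0},
]
valid, rejected = validate(batch)
```

Rejected records keep the list of failed rules, so a team can see why a row was dropped instead of silently losing it.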

Faster time-to-insights

DataOps streamlines workflows, which speeds up the delivery of insights. Automated data pipelines and continuous integration practices mean data is processed quickly. This rapid processing allows data scientists to analyze data and generate insights faster.

For example, a retail company can quickly identify trends in customer behavior and adjust marketing strategies in real time.

❗Use data visualization tools that integrate directly with your automated pipelines. This setup allows immediate visual analysis, speeding up the insight-generation process.

Enhanced collaboration

By now it should be clear that the question is not DevOps vs. DataOps. They are different methodologies with a similar core approach, and both are crucial.

DataOps sets up a data-driven culture by improving communication across teams. Data engineers, data scientists, and product developers work together more effectively. This teamwork ensures that data flows smoothly from collection to analysis. Teams can identify and resolve issues quickly, leading to better data use and more informed decision-making.

❗Pencil in regular check-ins for cross-functional teams. Alignment is essential when specialists from different fields work together.

Increased agility and innovation

DataOps enables rapid iteration and adaptation based on data feedback. Here it takes a leaf out of the DevOps playbook, which revolutionized how companies push out software updates. Imagine going from waiting months to release a single update to doing it in hours or even minutes.

Back in 2013, Amazon was cranking out 23,000 updates every day (roughly one update every four seconds), and they got each one out the door in just a few minutes, all thanks to DevOps.

Another hypothetical example: A financial services company can adjust its risk models in response to market fluctuations, providing more accurate predictions.

❗Adopt a modular data architecture. This allows teams to update or replace parts of the system without disrupting the entire workflow.

Reduced costs

Automation in DataOps can reduce operational costs associated with manual data management. According to McKinsey, companies that automate data processes can reduce data management costs by up to 40%. Automate repetitive tasks so that your teams can focus on more strategic activities, increasing overall efficiency.

❗Invest in cloud-based data solutions that offer scalable storage and compute power. This flexibility reduces the need for expensive on-premises infrastructure and maintenance.

Brief recap

DataOps brings numerous benefits to analytics product development. By improving data quality, speeding up time-to-insights, enhancing collaboration, increasing agility, and reducing costs, DataOps helps businesses leverage their data more effectively. Implement these practices to transform how your organization manages and uses data. Chances are you’ll get better outcomes and a stronger competitive edge.

From prototype to production: Putting DataOps into action

DataOps is a powerful tool that organizations can leverage to build real-world data-driven products. Imagine a telecommunications company aiming to reduce customer churn by predicting which customers are likely to leave. They decide to implement DataOps to build a robust churn prediction model. This hypothetical scenario shows how DataOps can streamline the development of analytics products from start to finish.

A real-world example from telecom: BT Group, a British telecom company, did exactly that. They leveraged DataOps to predict customer churn and improve customer retention strategies. Automated data pipelines and collaborative workflows allowed them to significantly reduce churn rates.

Teamwork throughout the development cycle

Data acquisition:

Data engineers set up automated pipelines to collect data from various sources, such as customer interactions, billing records, and support tickets. They use tools like Apache NiFi or Informatica to ensure seamless data integration.

Tip: Ingest data in real time where possible. This keeps the data current, which in turn makes decision-making more flexible and helps you make timely predictions and interventions.

Data preparation:

The raw data is then cleaned and transformed. Data engineers apply data quality checks to ensure accuracy. They use tools like Talend or AWS Glue for ETL (Extract, Transform, Load) processes.

Tip: Implement data versioning to keep track of changes and ensure reproducibility.
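
One lightweight way to approach data versioning is to derive a version ID from the dataset's content, so that any change to the data yields a new ID that can be logged alongside a model run. The sketch below assumes small, JSON-serializable records and a hypothetical `dataset_version` helper; dedicated data versioning tools handle large files and storage far better.

```python
import hashlib
import json


def dataset_version(records: list[dict]) -> str:
    """Return a short, deterministic fingerprint of a dataset's content."""
    # sort_keys makes the serialization independent of dict key order.
    # Row order still matters: reordering rows produces a different version.
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


v1 = dataset_version([{"customer_id": "c1", "churned": False}])
v1_again = dataset_version([{"churned": False, "customer_id": "c1"}])
v2 = dataset_version([{"customer_id": "c1", "churned": True}])
```

Here `v1` and `v1_again` match because only the key order differs, while `v2` differs because a value changed.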

Model development:

Data scientists take the cleaned data and develop predictive models using machine learning algorithms. They use platforms like Databricks or Jupyter Notebooks for this purpose.

Tip: Use automated machine learning (AutoML) tools to expedite model development and enhance model performance.

Model deployment:

Once the model is ready, it is deployed into production. Data engineers and data scientists collaborate to integrate the model into the company's operational systems using tools like Docker and Kubernetes.

Tip: Employ continuous integration and continuous deployment (CI/CD) practices for models, just like in software development, to ensure smooth and rapid updates.
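
In a CI/CD setup for models, one common pattern is an automated quality gate that decides whether a newly trained model may replace the one in production. The following is a sketch under assumptions: the `should_promote` function, the choice of AUC as the metric, and the threshold values are illustrative, not a prescribed standard.

```python
def should_promote(
    candidate_auc: float,
    production_auc: float,
    min_auc: float = 0.70,
    tolerance: float = 0.01,
) -> bool:
    """Gate a model release.

    The candidate must clear an absolute quality floor and must not be
    worse than the production model by more than `tolerance`.
    """
    if candidate_auc < min_auc:
        return False
    return candidate_auc >= production_auc - tolerance


better_model = should_promote(candidate_auc=0.82, production_auc=0.80)
weak_model = should_promote(candidate_auc=0.65, production_auc=0.60)
regressed_model = should_promote(candidate_auc=0.75, production_auc=0.80)
```

A pipeline step could call a check like this after evaluation and stop the deployment when it returns `False`.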

Monitoring and maintenance:

After deployment, the model's performance is continuously monitored. Any issues are addressed promptly to ensure the model remains effective. Tools like Prometheus or Grafana are used for monitoring.

Tip: Set up automated alerts to detect and respond to anomalies in real time.
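
As an illustration of automated anomaly alerts, the sketch below flags a metric value that deviates strongly from its recent history using a simple z-score. The `is_anomalous` helper, the threshold of 3 standard deviations, and the sample accuracy values are assumptions for the example; monitoring tools typically offer their own alerting rules.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily accuracy of the deployed churn model.
daily_accuracy = [0.91, 0.90, 0.92, 0.91, 0.89, 0.90, 0.92]
alert_normal = is_anomalous(daily_accuracy, 0.90)
alert_drop = is_anomalous(daily_accuracy, 0.70)
```

A value close to the recent average raises no alert, while a sharp drop does; in practice the alert would be routed to the team's communication channel.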

Some tips and tricks

Use a shared workspace: Obvious advice, yet reliable. Platforms like GitHub or GitLab enable collaborative coding and version control, ensuring everyone has access to the latest changes.

Use a single communication channel: Slack, Microsoft Teams, or even an in-house messenger. Pick whichever suits your team.

Create a feedback loop: Review model performance regularly and incorporate feedback from all stakeholders to continuously improve the product.

Brief recap

With DataOps, a company can build, deploy, and maintain its churn prediction model. This approach enhances the quality and reliability of the model and fosters a collaborative and agile environment, which in turn leads to better decision-making and improved business outcomes.

To sum it up

Imagine a world where data isn't locked away in silos but flows freely between teams. This is the power of DataOps methodology.

DataOps bridges the gap between data and development teams, creating a single environment for collaboration and streamlining workflows. Core functionalities like automated data pipelines, data governance practices, and the focus on collaboration mentioned above empower teams to move faster and drive more business value.

With DataOps tools, you're not just building products; you're building a data-driven product factory. Clean, reliable data is readily available, insights are generated continuously, and steady improvement is woven into the development process. The result is analytics products that deliver real business value, outpace the competition, and keep you at the forefront of the data revolution.
