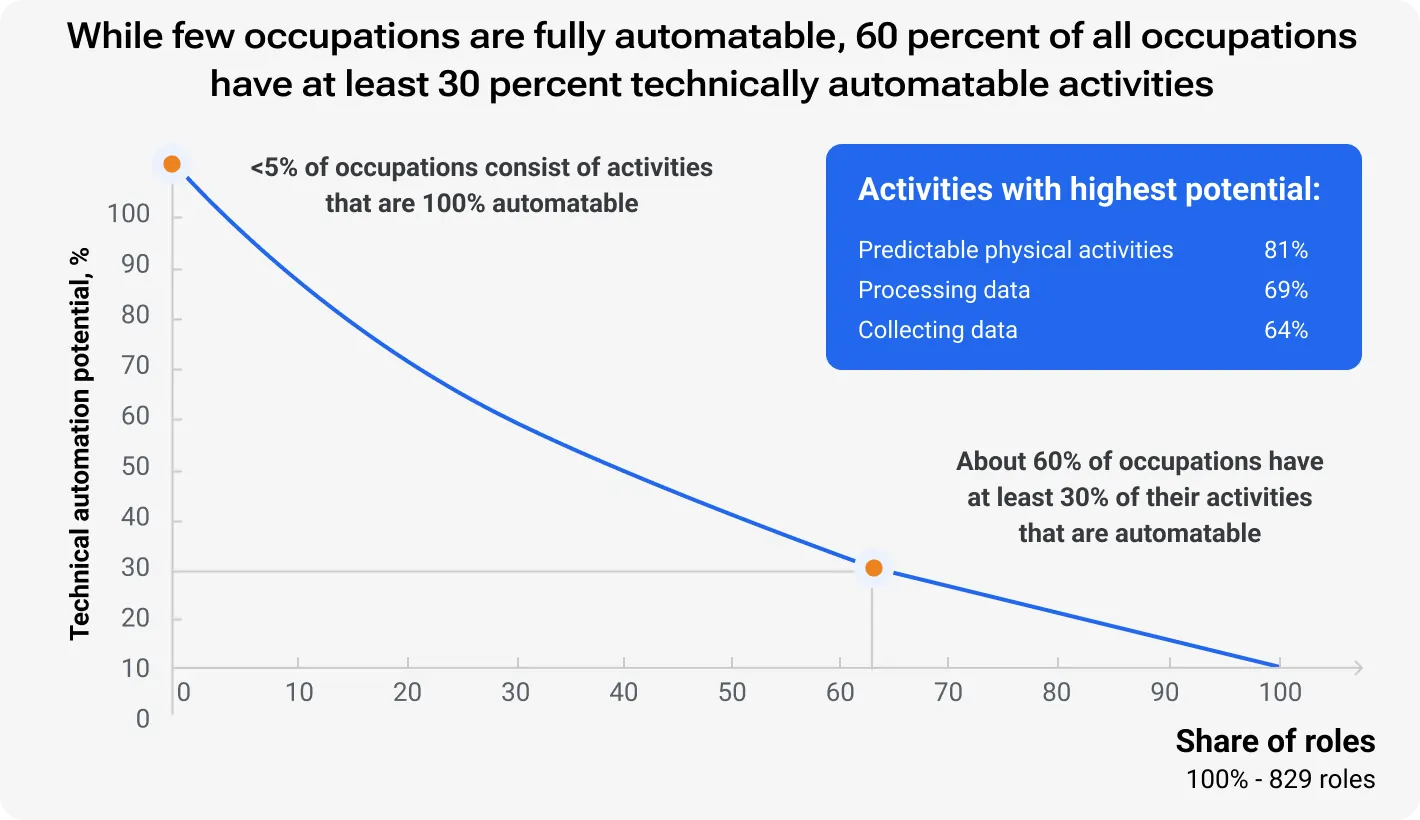

Chances are we'll all be swamped by the amount of data very soon. And the data engineering teams will be the most overwhelmed as about 60% of data engineers spend more than half their time on manual, repetitive tasks.

However, there is an app for that — data processing automation. O'Reilly states that 40% of companies use automation and AI to improve their data processes: many companies rely on process and data automation in the industry.

What is the purpose of data automation? It simplifies and accelerates data workflows, using tools and scripts to perform repetitive tasks. Like data extraction, transformation, and loading (ETL). Automation helps teams save time, reduce errors, and focus on more strategic work.

Different types of tasks are suitable for automation in data pipelines. For instance, data ingestion can be automated to pull data from various sources into a centralized location. Data transformation tasks, like cleaning and formatting data, can also be automated. These processes ensure data consistency and readiness for analysis. Additionally, data loading can be automated to move processed data into storage systems, making it available for further use.

Close the gap between data and decision-making with data management to drive innovation up to 75%

And data automation software plays a crucial role in this process. Apache NiFi, Talend, and Apache Airflow allow data engineers to design and manage automated workflows. These tools enable seamless data movement and transformation across different platforms and systems.

What is automation in data engineering?

Imagine a data engineer meticulously copying and pasting data from various sources, painstakingly cleaning and transforming it before finally loading it into a system for analysis. This repetitive, manual approach is probably the most common bottleneck for any company when analyzing data. Data management automation offers a smarter way to work.

Simply put, it’s when a data pro uses tools and techniques to automate repetitive tasks within data pipelines. Such a move enhances efficiency and reduces manual effort, freeing time for more strategic activities.

Data automation examples

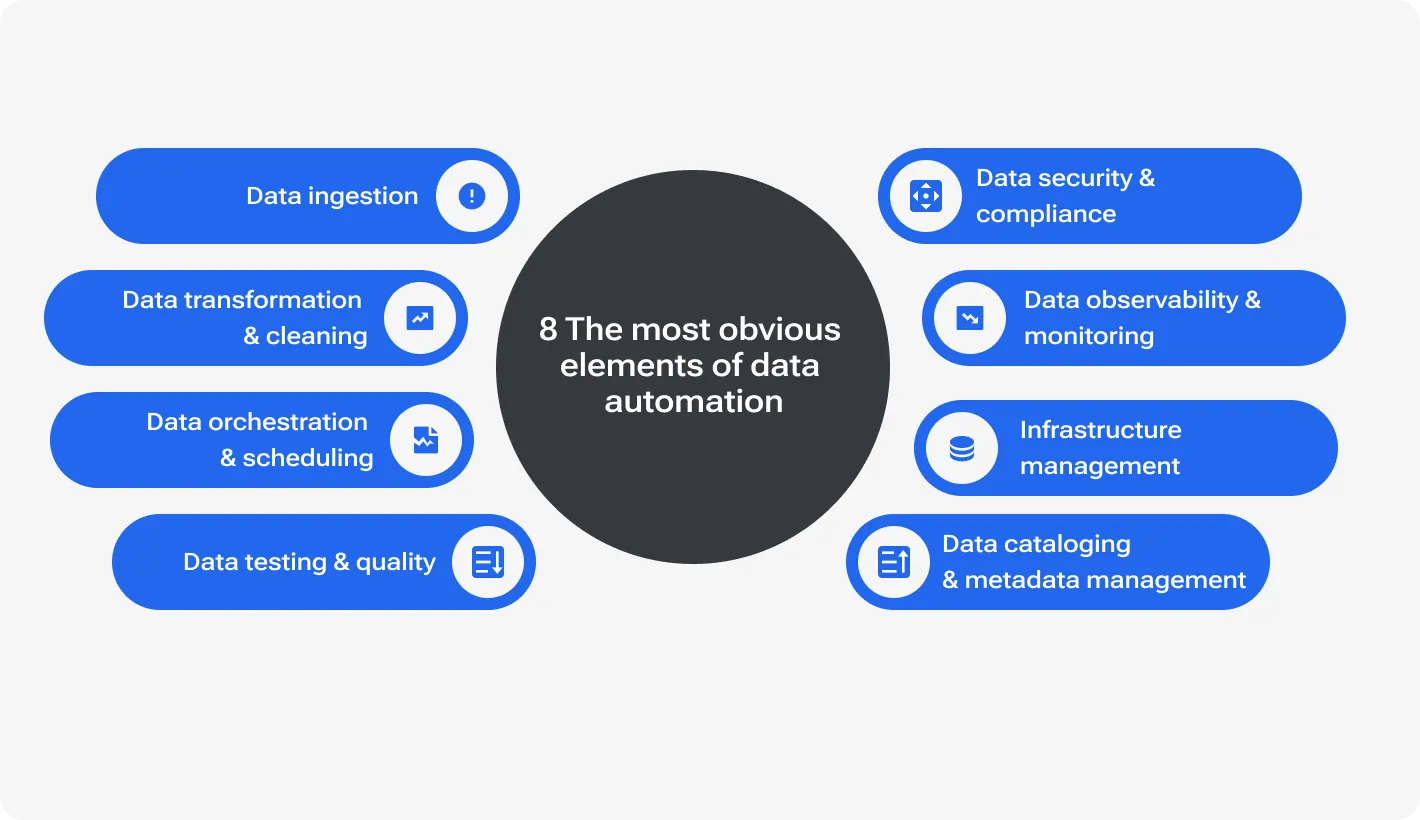

Eight the most obvious elements of data automation:

Let’s run through some particular tasks when it comes to automation.

1/ Data extraction:

Automation can simplify the extraction of data from various sources such as databases, APIs, and cloud services. Apache NiFi and Talend serve to set up automated data extraction workflows that pull data from multiple sources into a centralized system.

2 /Data transformation and cleaning:

Automation tools can handle tasks like data validation, formatting, and deduplication. Data pros often use Apache Spark and Python scripts for this purpose.

3 /Data loading:

Once transformed and cleaned, the data needs to be loaded into a destination system for analysis. Data automation tools can automate this process as well, ensuring the data arrives at its designated data warehouse, data lake, or other analytics platform. The process is akin to delivery trucks, transporting the prepared information to its final destination for further analysis.

4/ Scheduling and orchestration:

Data pipelines often involve complex workflows with multiple stages. To align all the workflows, data specialists use special tools to schedule the execution of particular tasks according to a defined cadence. Some such tools are Cron jobs or managed services like AWS Glue can trigger data pipelines at specified intervals. This ensures that data processing tasks run reliably and on time.

Why automate? Unveiling the benefits

Increased efficiency

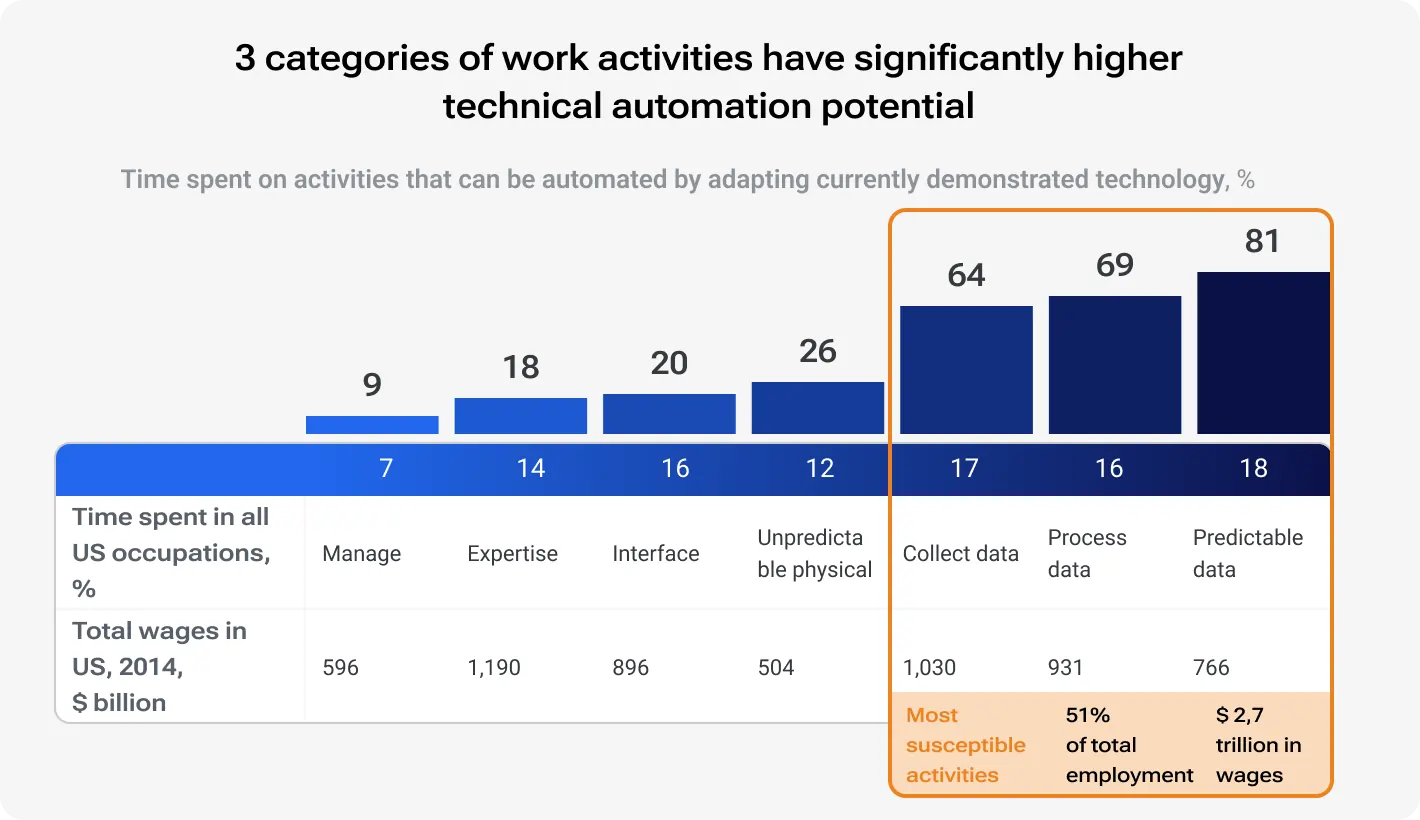

Automating repetitive tasks frees up data engineers to focus on more strategic initiatives. We already mentioned the research of time wasted on repetitive tasks. There is another one: data specialists dedicate an average of 37% of their workweek to manual data-wrangling tasks. Free up valuable time for data engineers to dive deeper into data modeling, advanced analytics, and exploring new technologies. Just imagine how much value can bring such a move.

Improved data quality

Undoubtedly, manual data processing has its benefits, like the helicopter view and personal experience of the data specialist. However, they still remain humans… and can make mistakes.

Automating data pipelines reduces this risk and ensures consistency in data processing. Manual data handling can compromise data quality. Automation tools apply data validation and cleaning processes uniformly, ensuring that the data remains accurate and reliable.

Faster access

The workflow in almost any team includes the moment when data engineers must approve access whenever someone needs it. But to do this right, they need the lowdown on why the access is needed and when to pull the plug.

Sicu manual gatekeeping slows down the data pipeline, and this, in turn, affects fast and precise decision-making.

Solution is simple: shift to automated data access controls, leverage self-service portals, and seamless access management. This way, data engineering teams can smooth out the kinks in the data access process, slashing the manual workload and amping up efficiency.

Enhanced scalability

When it comes to mounting up information, automated workflows are very helpful in handling growing volumes without significant manual effort — constantly, manual processes become untenable. Automation tools like Apache Spark and Apache NiFi can scale to process large datasets efficiently, ensuring that data pipelines remain robust and performant.

Faster time-to-insights

We all know how long it lasts to align in vision in a particular case and to get approval from the company’s stakeholders. In this case, automation is a perfect aider — it accelerates data processing and the delivery of insights to ExCo members.

For example, a retail company can use automated data pipelines to analyze customer behavior and optimize their marketing campaigns accordingly. This, in turn, makes them quicker on the uptake.

Reduced costs

Automation can potentially reduce operational costs associated with manual data engineering tasks. According to McKinsey, companies that automate their data processes with AI can reduce operational costs by 20% on average. This cost-saving comes from reducing the need for manual data management and increasing operational efficiency.

Do you need to optimize storage costs as the management of large datasets has become prohibitively expensive?

Data automation services even out repetitive tasks within data pipelines and improve efficiency and accuracy. Data extraction, transformation, loading, and scheduling can be and even must be automated — you need your key specialists for more strategic tasks, innit?

Start implementing by identifying repetitive tasks in your data workflows. Use Apache NiFi for data extraction, Apache Spark for data transformation, and Apache Airflow for scheduling and orchestration.

Data automation maturity levels

Many companies in the US and the entire industry are prioritizing data accessible and usable across various internal levels. From data engineers to absolutely any involved user within a company. This initiative democratizes data access and cultivates a data-centric culture, accelerating informed decision-making processes across departments.

Yet, this can be a marker of maturity, and not all companies reached that readiness level. Notwithstanding, it’s a crucial part of assessing current practices and identifying areas for improvement. The framework below outlines the progression from basic to advanced automation, providing a roadmap for growth.

Common stages of automation adoption

1/ Ad-hoc automation:

Obviously, at the very beginning, manual processes will dominate. Teams occasionally use scripts or tools for specific tasks but don’t have a cohesive automation strategy. In this situation, your specialists will address issues as they arise rather than preventing them.

2/ Structured manual process:

Here, things start to get a little more organized. Data team defined processes and established them for data pipelines. They have a playbook for data pipelines, but the execution relies heavily on manual effort which witnesses early-stage maturity. Automation is applied on and off which inevitably brings about inefficiency.

3/ Standardized automation:

At this stage, the company has graduated from manual drudgery. Automation becomes a core principle. The team automated key tasks within the data pipeline. They also use tools and scripts to automate these key processes to ensure that data flows smoothly and predictably. This stage marks the beginning of a more strategic approach to automation.

4/ Optimized automation:

This is the data automation endgame as, at this level, the company uses advanced automation techniques. Teams are continuously monitoring workflows and optimizing for performance. They also leverage data automation tools and technologies to automate complex processes. Continuous integration and delivery (CI/CD) practices are applied to data pipelines, updates run rapidly and error-free.

Moreover, such companies adapted their policies to employ automation and evolved their training and education in general to help employees adjust to new workflows. This is not to mention income support, transition support, and safety nets.

Understanding your maturity level

Knowing your team's data automation maturity level helps define goals and develop a roadmap for further automation. Assess where you currently stand to identify gaps and set realistic objectives. For example, if your team operates at the Structured Manual Process level, your next goal might be to standardize automation across core tasks.

-

Assess current practices: Conduct a thorough review of your current data automation practices to determine your maturity level.

-

Set goals: Define clear, achievable goals for moving to the next maturity level.

-

Develop a roadmap: Create a detailed plan outlining the steps needed to reach your goals, including necessary tools, training, and process changes.

-

Benchmark your team: Maturity models provide a common framework for comparing your team's automation practices with industry standards or other data engineering teams.

How to automate your data pipelines: A practical guide

There is no single endeavor or significant achievement without practical steps. Start automating your data pipelines today to significantly boost efficiency and accuracy tomorrow. There is a step-by-step guide to help you get started.

1. Identify tasks for automation

Analyzing your data pipelines is the first thing to run. Look for tasks that involve high manual effort but are relatively simple to automate:

Highly manual: Focus on tasks that consume a significant amount of a data engineer's time through repetitive steps. These are prime candidates for automation. Simply ask around your data team to identify these time stealers. Or you can share a brief internal survey to strike two birds with one stone: identify time-consuming data tasks and save additional info for tracking progress.

Low complexity: Prioritize tasks that follow well-defined rules and logic. Complex tasks with decision-making elements might initially require human intervention, but as automation matures, these can be revisited for potential automation opportunities.

Common tasks examples: data extraction, transformation, loading, and scheduling. Prioritize these tasks to create a clear roadmap for automation.

2. Choose the right tools

We all live in a time when, in any case, we’ll have a multi-option choice. This applies to data automation tools as well. Here are three popular ones:

Apache Airflow: This tool is excellent for orchestrating complex workflows. It allows you to define tasks and their dependencies using Python code. Airflow’s main benefit is its robust scheduling and monitoring capabilities.

Luigi: Developed by Spotify, Luigi is ideal for building long-running pipelines. It excels at handling dependencies between tasks and ensuring that data flows smoothly through the pipeline. Luigi’s main feature is its ease of integration with other tools.

Prefect: Prefect is a modern tool that focuses on simplicity and scalability. It offers an intuitive interface and powerful features like dynamic mapping and state management. In regard to the main benefit, probably, it is the ability to handle dynamic workflows and real-time data.

3. Develop reusable workflows

Modular and reusable workflows can become your leg-up over your competitors. This approach makes it easier to maintain and scale your automation efforts.

Break down your pipelines into smaller components that can be reused across different projects. Recall kids with Lego blocks—reusable components can similarly be easily assembled to create complex workflows. The best task options to experiment with are modules for data extraction, transformation, and loading.

4. Implement error handling and monitoring

Even the best-laid plans can encounter hiccups. Set up mechanisms to handle them and monitor the performance of your automated workflows. For this reason, there are many logging and alerting tools that track the status of your tasks. If an error occurs, your system will automatically retry the task or notify the team. Some of the renowned similar instruments are Prometheus and Grafana — they can provide valuable real-time insights into your workflows.

5. Integrate with existing systems

Generic yet important advice — ensure that your automated workflows integrate seamlessly with your existing data infrastructure. Use APIs and connectors to link your automation tools with databases, data warehouses, and other systems. This helps maintain data consistency and enables smooth data flow across your company.

Meaningful summary

If you’re short on time to read the section, screen this.

Follow these 5 steps to effectively automate your data pipelines:

-

Identify tasks for automation: Focus on high-effort, low-complexity tasks.

-

Choose the right tools: Select tools like Apache Airflow, Luigi, and Prefect based on your needs.

-

Develop reusable workflows: Create modular components for easy maintenance.

-

Implement error handling and monitoring: Set up robust mechanisms for tracking and resolving issues. Consider Prometheus and Grafana for this purpose.

-

Integrate with existing systems: Ensure seamless connectivity with your current infrastructure.

Learn how to drive maximum business value from big data development

Beyond the tools: Best practices for successful automation

Case studies teach us many helpful things. However, the most important is probably the ability to spot best practices. In our situation — to help make your transition smoother and easier. Here are some key considerations to keep in mind.

Version control

Let’s say, you've implemented a new data transformation step in your automated pipeline. However, it introduces an unexpected error. Without version control, reverting to a previous working version becomes a messy ordeal. That’s why it’s crucial to implement version control for code and data pipelines. It’s your magic wand for tracking changes and facilitating rollbacks.

Use tools like Git for that purpose: if an update to a data pipeline causes a failure, you can quickly revert to a previous stable version. This ensures that you can recover from issues without disrupting workflows.

Another example: Imagine you automated a data extraction process. After a recent update, the pipeline starts failing due to a new bug. With version control, you can easily roll back to the previous version that worked fine, fixing the issue without significant downtime.

Testing and validation

Automated workflows don’t mean to run without testing. And you should do it before deployment to ensure accuracy and reliability. Implement unit tests, integration tests, and end-to-end tests to cover all aspects of your workflows. Testing helps identify and fix issues early.

Tip: Use continuous integration (CI) tools like Jenkins or CircleCI to automate your testing processes. Every time you make a change to your code, automation tests will check the lines, spotting errors — without additional efforts from your end.

Documentation

If you want to help Future You, make sure your documentation of automated workflows is clear and comprehensive. Confluence, Notion, and Markdown files in Git repositories can help streamline all issues and processes involved in doc creation and maintenance. To maintain documentation in a structured form, include clear descriptions, flow diagrams, and configuration details.

Tip: It's generally better to teach your tech pros to document their workflows. This approach ensures that the documentation is accurate. However, for larger teams or complex systems, hiring a tech writer might be beneficial to maintain high-quality documentation. Especially, if the hired pro had tech/coding experience before.

Continuous improvement

Data processing automation needs additional attention in the first stages. Review and optimize automated workflows regularly based on performance data and user feedback. Use monitoring tools to gather insights into workflow performance. Look for bottlenecks and inefficiencies and make adjustments as needed.

Tip: Schedule regular review sessions to analyze workflow performance. Involve your team in these reviews to gather diverse perspectives and identify areas for improvement.

The future of automation: Emerging trends and tools

A couple of last years proved that no one knows the future. However, there are some trends we see in data automation services. Here's a glimpse into some of them.

Machine learning for automated data pipelines

Machine learning is probably one of the most influential trends for this reason. ML enables automated data pipelines so they can learn from data patterns and improve over time.

Gartner predicts 70% of organizations will use machine learning in data pipelines by 2025. Look at Spotify; they use machine learning to automate data processing for personalized music recommendations. The other day the company rolled out AI-formed playlists. This approach has significantly improved their data processing efficiency and user experience, irrespective of some offensive prompting issues.

Explore the latest trends in data engineering to maintain a competitive edge

Integration of Artificial Intelligence for workflow optimization

ChatGPT-4o can deeply analyze spreadsheets, slides, and other data “containers”. Gemini can create tables based on your input and reformat it into pitch decks.

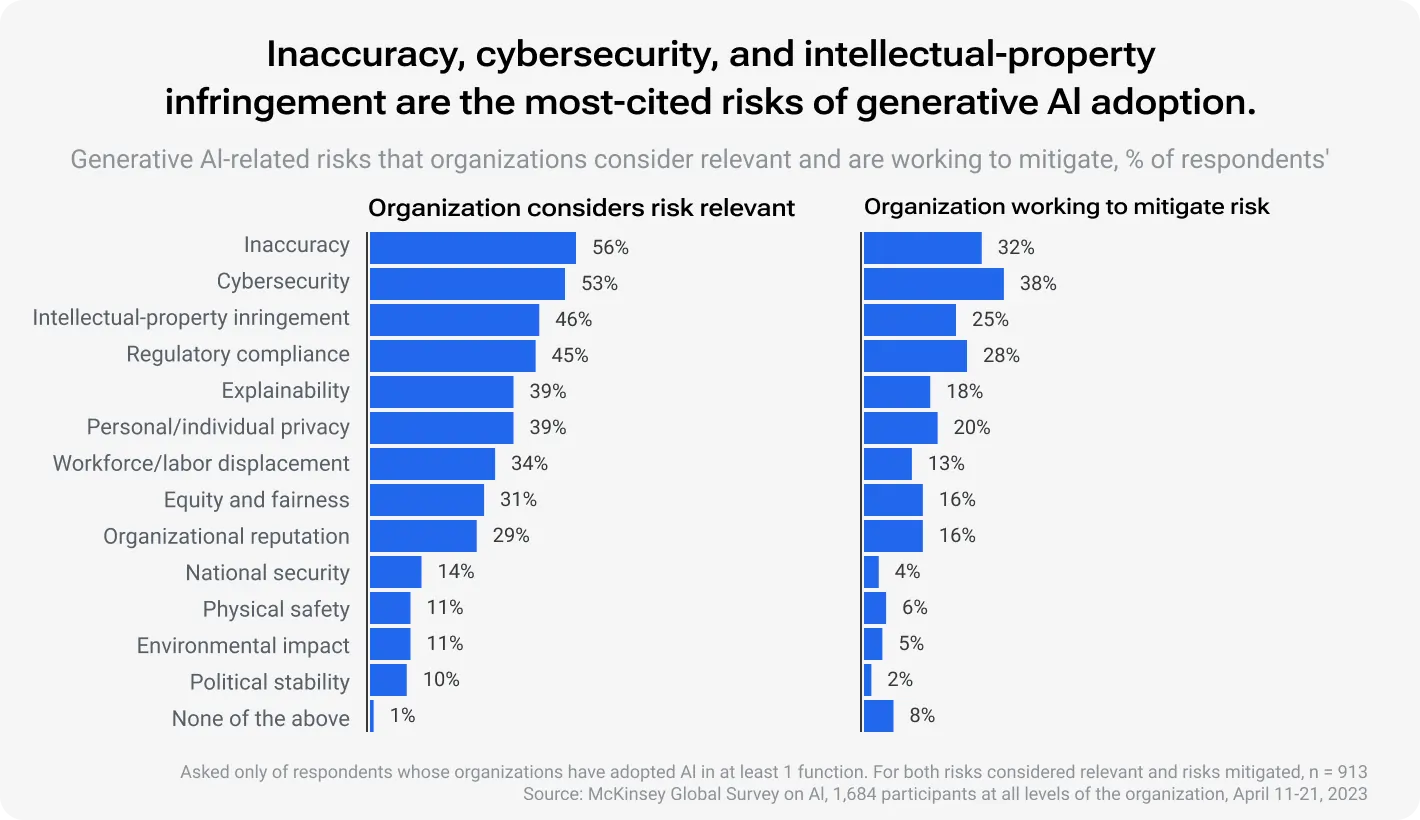

Indeed, AI-powered tools filled the global data landscape. Artificial intelligence can analyze data pipeline performance, identify bottlenecks, and even suggest self-healing mechanisms. For this reason, there was a pretty interesting 2023 McKinsey report, stating that "AI can automate repetitive tasks, optimize workflows, and identify patterns that humans might miss." According to this report, AI-driven workflow optimization can increase productivity by up to 40%.

Of course, there is no flawless tool, but still, AI brings about many advantages.

Cloud-native automation tools and platforms

Cloud-native automation tools offer scalability, flexibility, and ease of use. These features make them ideal for handling large data volumes and complex workflows. The tools each data pro should know of:

AWS Glue: A fully managed ETL service that makes it easy to prepare and load data for analytics. AWS Glue automates data extraction, transformation, and loading processes, reducing the need for manual intervention.

Google Cloud Dataflow: A fully managed service for stream and batch processing. Google Cloud Dataflow simplifies the development of data pipelines by providing a unified programming model. It offers automatic scaling and performance optimization, ensuring efficient data processing.

Azure Data Factory: A cloud-based data integration service that allows you to create, schedule, and orchestrate data workflows. Azure Data Factory supports a wide range of data sources and provides built-in connectors, making it easy to integrate and automate data processes across different platforms.

Leveraging machine learning, AI, and cloud-native platforms allows data engineering teams to achieve higher efficiency, scalability, and innovation in their workflows.

To sum it up

Without any pathos, data automation software is a game-changer. Among other benefits, such an approach increases efficiency, improves data quality, enhances scalability, faster time-to-insights, and compliance. This is not to mention reduced costs.

Data processing automation evens out repetitive tasks within data pipelines so that teams can focus on more strategic initiatives. A typical implementing process looks like this:

identifying tasks for automation — choosing the right tools — developing reusable workflows — implementing error handling and monitoring — integrating with existing systems.

Understand the stages of your data automation maturity, from ad-hoc processes to optimized automation, and follow best practices like version control, thorough testing, clear documentation, and continuous improvement to hit the best result possible.

What about trends? Chances are that machine learning for automated data pipelines, AI for workflow optimization, and cloud-native tools will shape future processes and data automation.

Make your data reliable now